Abstract

Reconstructing dynamic 3D scenes from 2D images and generating diverse views over time is challenging due to

scene complexity and temporal dynamics. Despite advancements in neural implicit models, limitations persist:

(i) Inadequate Scene Structure: Existing methods struggle to reveal the spatial and temporal structure of dynamic scenes

by directly learning the complex 6D plenoptic function. (ii) Scaling Deformation Modeling:

Explicitly modeling scene element deformation becomes impractical for complex dynamics. To address these issues,

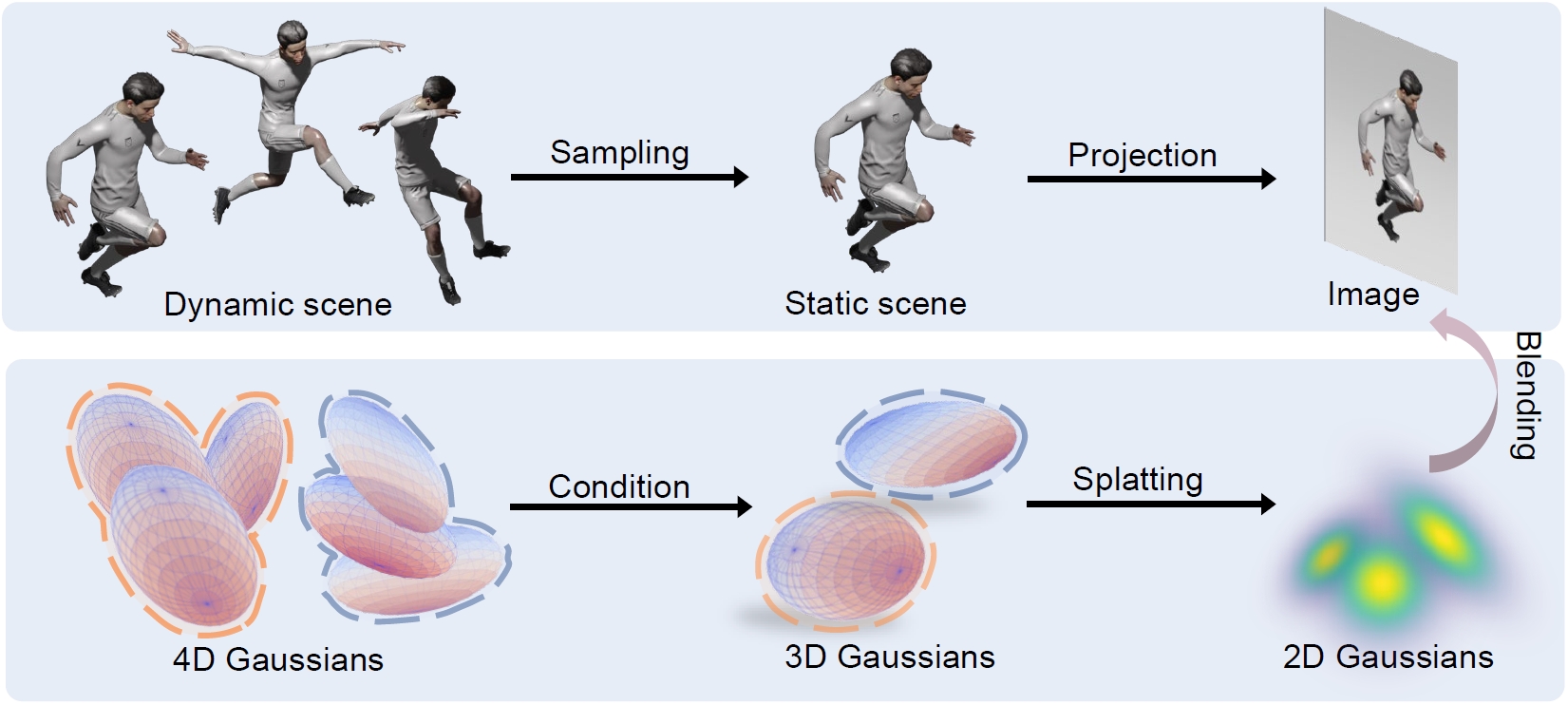

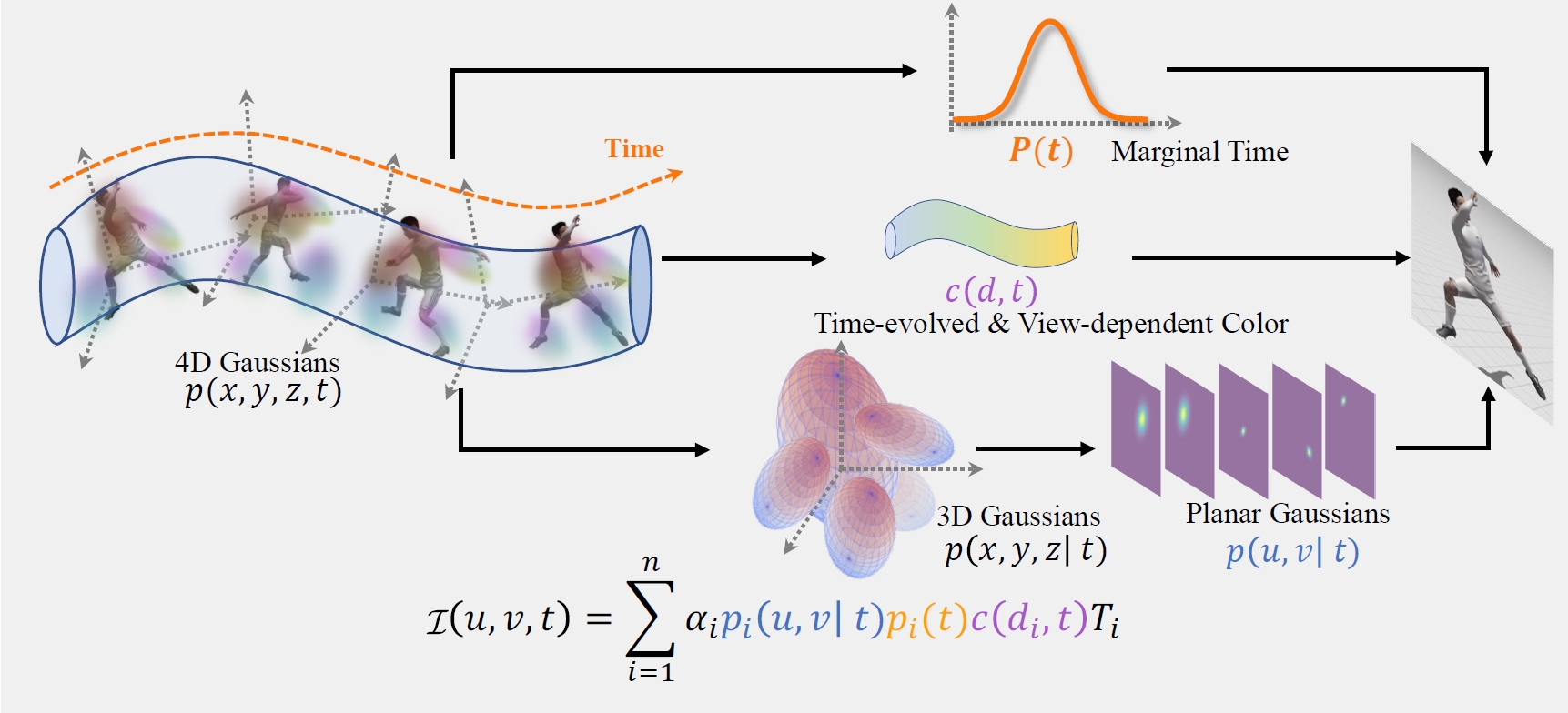

we consider the spacetime as an entirety and propose to approximate the underlying spatio-temporal 4D volume of a dynamic scene

by optimizing a collection of 4D primitives, with explicit geometry and appearance modeling.

Learning to optimize the 4D primitives enables us to synthesize novel views at any desired time with our tailored rendering routine.

Our model is conceptually simple, consisting of a 4D Gaussian parameterized by anisotropic ellipsoids that

can rotate arbitrarily in space and time, as well as view-dependent and time-evolved appearance represented by the

coefficients of 4D spherindrical harmonics. This approach offers simplicity, flexibility for variable-length video and

end-to-end training, and efficient real-time rendering, making it suitable for capturing complex dynamic scene motions.
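
To make the idea of a 4D Gaussian that can be queried "at any desired time" concrete, the following is a minimal NumPy sketch, not the implementation behind this work. It assumes the covariance is built as Σ = R S Sᵀ Rᵀ from a 4×4 rotation and per-axis scales, and it uses the standard conditioning formula for multivariate Gaussians to obtain a 3D Gaussian at a fixed time. The function names (`make_cov4d`, `slice_at_time`, `random_rotation4d`) and the `(x, y, z, t)` axis order are hypothetical choices for illustration.

```python
import numpy as np


def random_rotation4d(rng):
    """Draw a random 4x4 rotation (orthonormal, det = +1). Illustrative helper."""
    q, r = np.linalg.qr(rng.standard_normal((4, 4)))
    q = q * np.sign(np.diag(r))      # fix column signs so the factorization is unique
    if np.linalg.det(q) < 0:         # flip one axis to turn a reflection into a rotation
        q[:, 0] = -q[:, 0]
    return q


def make_cov4d(scales, rotation):
    """Assumed parameterization: Sigma = R S S^T R^T, axes ordered (x, y, z, t)."""
    m = rotation @ np.diag(scales)
    return m @ m.T


def slice_at_time(mean4d, cov4d, t):
    """Condition a 4D Gaussian on a fixed time t.

    Returns the conditional 3D mean, the conditional 3D covariance, and an
    unnormalized temporal weight exp(-0.5 * (t - mu_t)^2 / var_t).
    """
    mu_xyz, mu_t = mean4d[:3], mean4d[3]
    cov_xyz = cov4d[:3, :3]          # spatial block
    cov_xt = cov4d[:3, 3]            # space-time coupling
    var_t = cov4d[3, 3]              # temporal variance
    dt = t - mu_t
    mean3d = mu_xyz + cov_xt * (dt / var_t)
    cov3d = cov_xyz - np.outer(cov_xt, cov_xt) / var_t
    weight = np.exp(-0.5 * dt * dt / var_t)
    return mean3d, cov3d, weight


rng = np.random.default_rng(0)
R = random_rotation4d(rng)
cov = make_cov4d(np.array([0.5, 0.2, 0.1, 0.3]), R)
mean = np.array([0.0, 0.0, 1.0, 0.5])

# Querying at the primitive's own temporal center leaves the spatial mean unchanged.
mean3d, cov3d, weight = slice_at_time(mean, cov, t=0.5)
```

In this sketch the space-time coupling term `cov_xt` shifts the 3D mean linearly as `t` moves away from the temporal center, while the temporal weight fades the primitive out; the conditional covariance does not depend on `t`.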

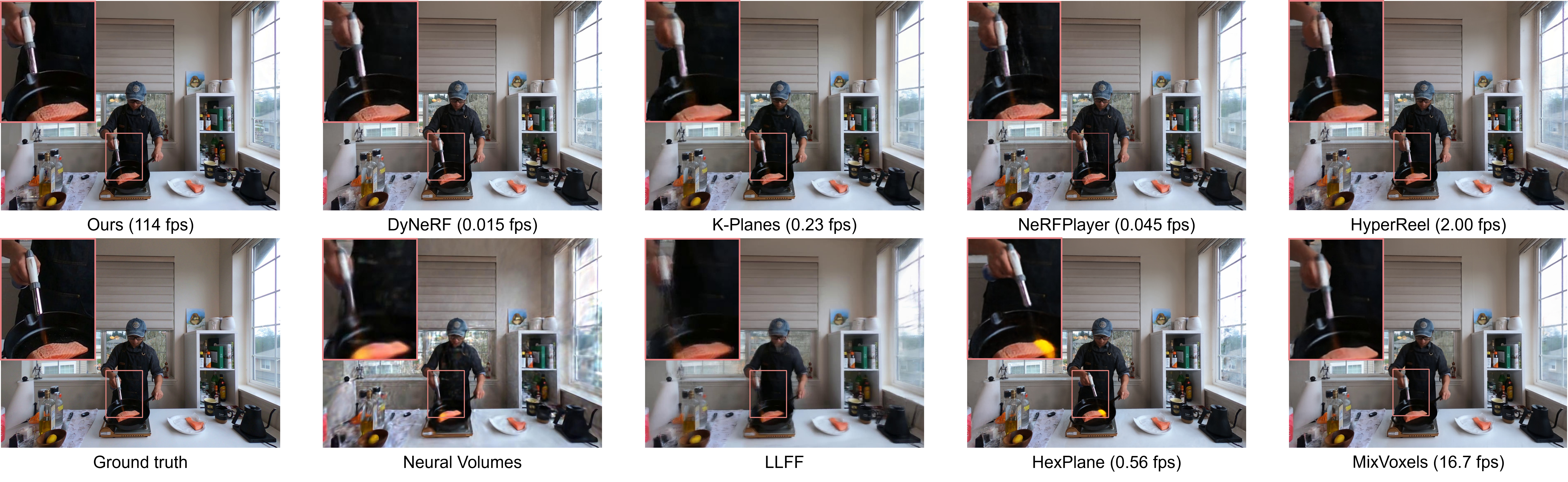

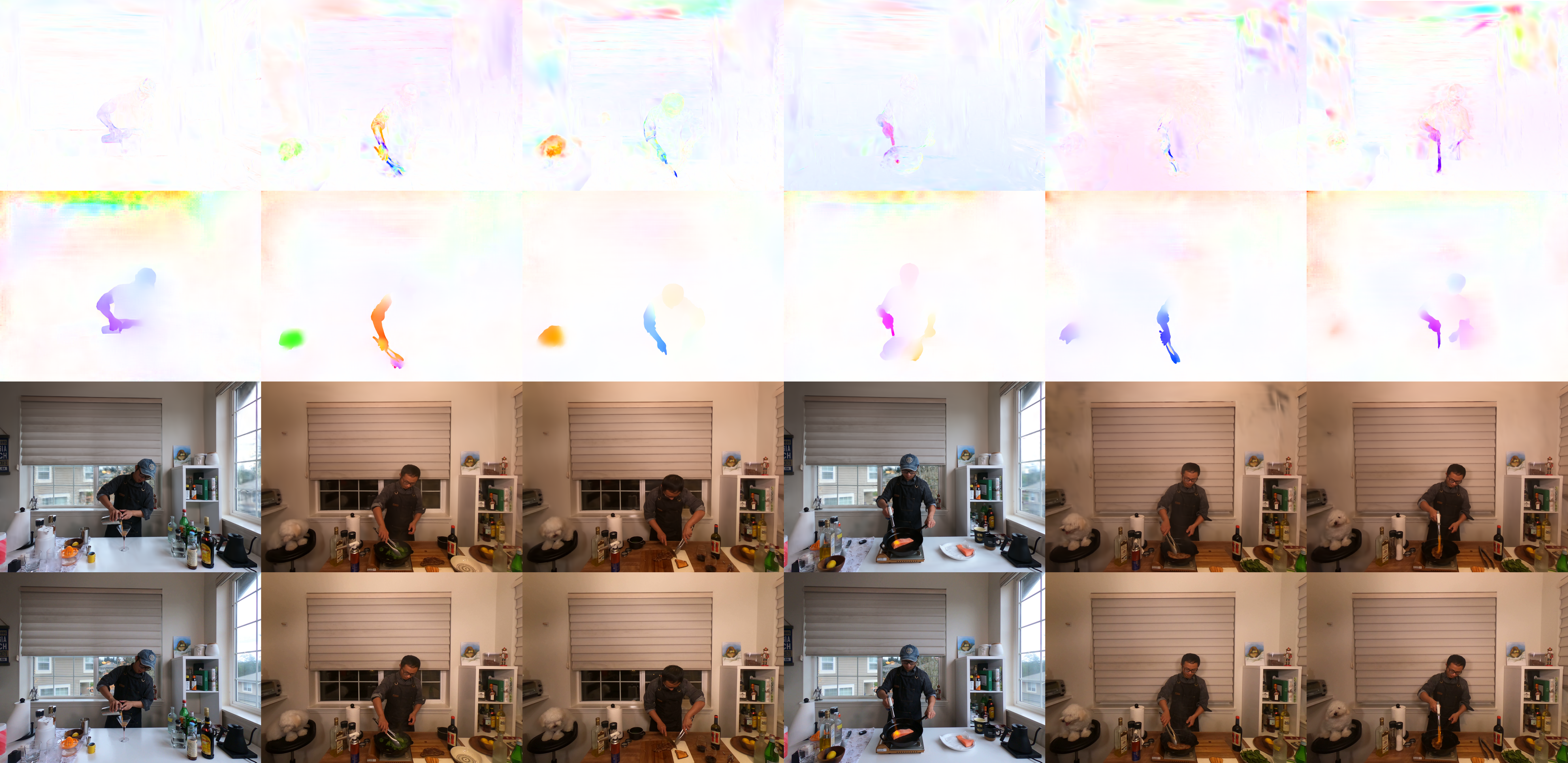

Experiments across various benchmarks, including monocular and multi-view scenarios,

demonstrate our 4DGS model's superior visual quality and efficiency.